Business Problem:

Visakhapatnam, also known as Vizag, is the largest city in the Indian state of Andhra Pradesh, the headquarters of Visakhapatnam district, and one of the four smart cities of Andhra Pradesh. In addition to its rich history, Vizag has a pleasant climate thanks to its position between the Eastern Ghats and the coast of the Bay of Bengal, which makes it a popular destination for leisure tourism.

The target audience for this project is tourists. The goal is to help them explore the city on their own and find popular venues and cityscapes, such as Neighbourhoods and Landmarks, instead of hiring a travel guide, which adds to their budget.

Data

This section describes the data and how it will be used to solve the problem.

Clearly, to solve the above business problem we need location data. What is location data? It is data that describes places and venues: their geographical location, category, working hours, full address, and so on. Given a location in the form of geographical coordinates (latitude and longitude values), such data lets us determine what types of venues exist within a defined radius of that location.
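To make the idea of "venues within a defined radius" concrete, here is a minimal sketch of a radius filter based on the haversine great-circle distance. It only illustrates the concept; in this project the location data provider does the filtering for us. The function names and the `lat`/`lng` dictionary keys are assumptions made for the example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance in kilometres between two (lat, lng) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lng2 - lng1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def venues_within(venues, center, radius_km):
    """Keep the venues (dicts with 'lat' and 'lng') within radius_km of center."""
    lat0, lng0 = center
    return [
        venue for venue in venues
        if haversine_km(lat0, lng0, venue["lat"], venue["lng"]) <= radius_km
    ]
```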

So, for a given location you can tell which places exist nearby, as long as they are present in the data. Location data providers include i) Foursquare, ii) Google Places, and iii) Yelp.

The best way to choose a location data provider is to consider these factors:

1) Rate Limits, 2) Costs, 3) Coverage, 4) Accuracy, 5) Update Frequency. Foursquare updates its data continuously, whereas other providers update their data either daily or weekly.

Data Collection:

Foursquare:

For this project we will use the Foursquare location data set, since it appears to be the most comprehensive so far. Creating a developer account to use the API is also quite straightforward compared to other providers. We’ll explore the city by leveraging location data to find its venues.

Web Scraping:

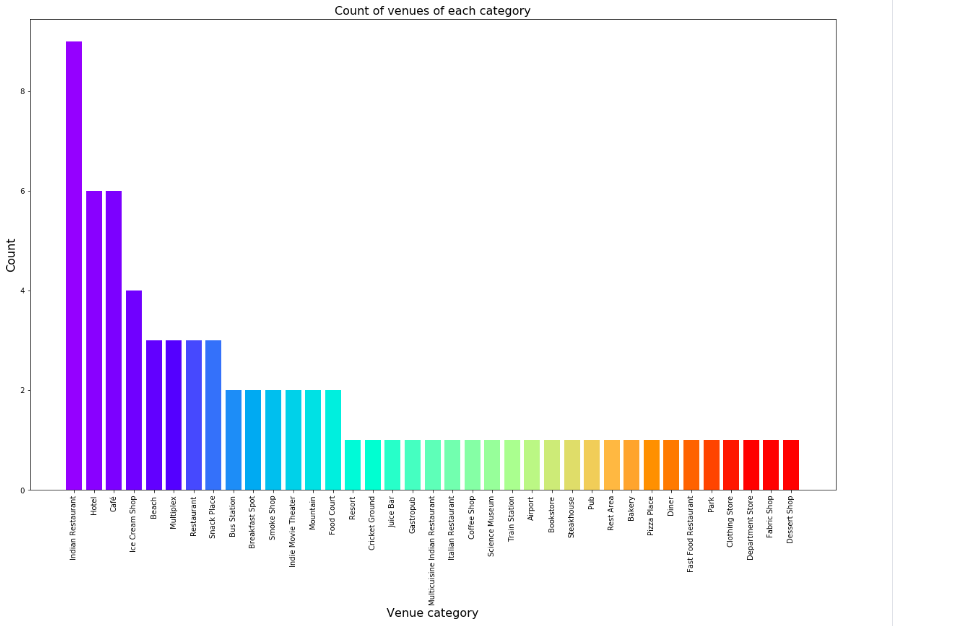

To extract the cityscapes, i.e. Neighbourhoods and Landmarks, we’ll use BeautifulSoup, a Python library for getting data out of HTML, XML, and other markup languages.

Methodology

The Methodology section is the main component of the report. Here we’ll identify and explore the venues within 10 km of the city center and display them with their respective addresses, and we’ll also display the Neighbourhoods and Landmarks of Visakhapatnam city.

This enables any visitor or tourist to identify the venues they want to visit according to their taste.

As a first step, we will retrieve the data from the Foursquare API. We extract venue information within a 10 km range of the center of Visakhapatnam.

Secondly, we will extract the cityscapes, i.e. Neighbourhoods and Landmarks, from the Wikipedia page: http://en.wikipedia.org/wiki/Visakhapatnam

Next, we’ll display the places where the venues and cityscapes are located, so that any visitor can explore them on their own without needing to hire a tourist guide.

Finally, we’ll overlay the venues and cityscapes on the Visakhapatnam map.

We’ll perform exploratory data analysis to find the popular restaurants and their categories.

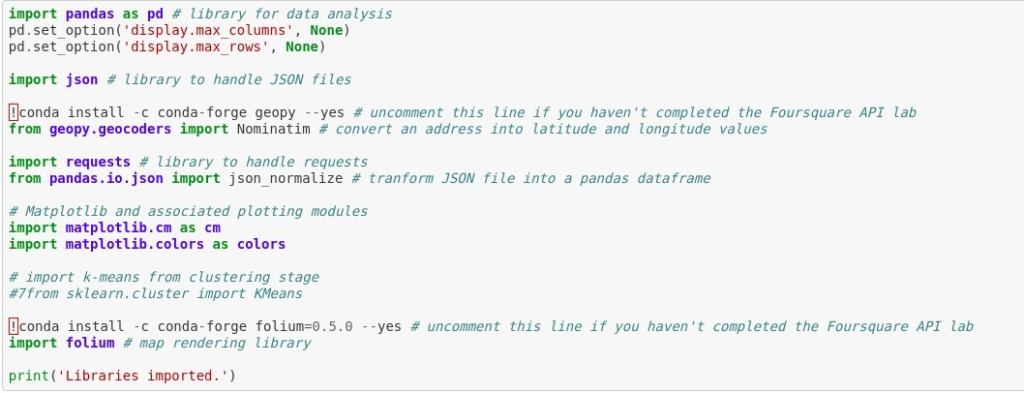

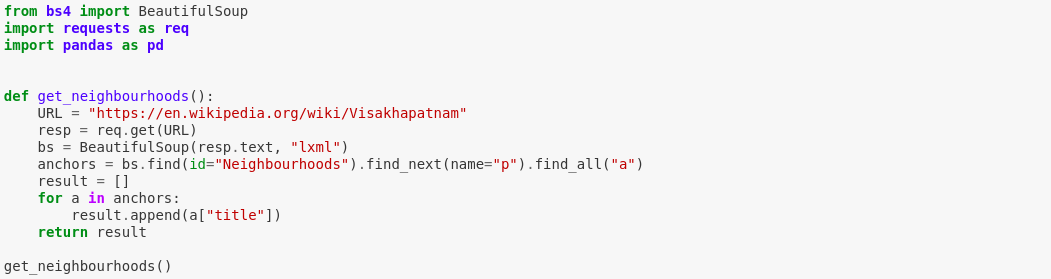

Before we start with the main content, let’s download all the dependencies that we will need.

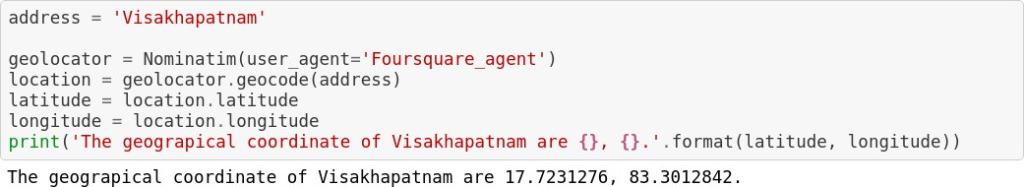

We begin by obtaining the geographical coordinates of Visakhapatnam, using geopy’s geocoders and Nominatim to convert an address into its latitude and longitude.
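The geocoding step can be sketched roughly as follows. This is not the exact code from the screenshots; the function names and the `user_agent` string are assumptions. The geocoder is passed in as a callable so that the conversion logic can be tried without network access, and geopy is imported only when the real Nominatim geocoder is requested.

```python
from typing import Callable, Optional, Tuple


def make_nominatim_geocoder(user_agent: str = "vizag_explorer") -> Callable:
    """Return geopy's Nominatim.geocode method (requires the geopy package)."""
    from geopy.geocoders import Nominatim  # imported lazily; optional dependency
    return Nominatim(user_agent=user_agent).geocode


def address_to_coordinates(address: str,
                           geocode: Callable) -> Optional[Tuple[float, float]]:
    """Convert an address to (latitude, longitude), or None if it is not found."""
    location = geocode(address)
    if location is None:  # geopy returns None when there is no match
        return None
    return (location.latitude, location.longitude)
```

With network access, a call such as `address_to_coordinates("Visakhapatnam, India", make_nominatim_geocoder())` would query the Nominatim service.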

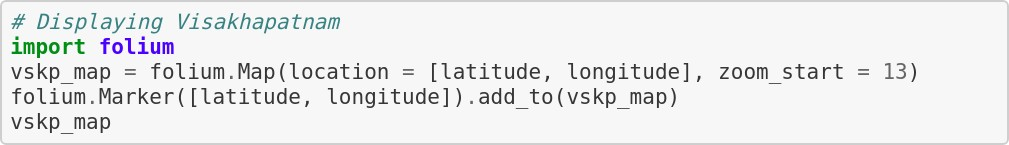

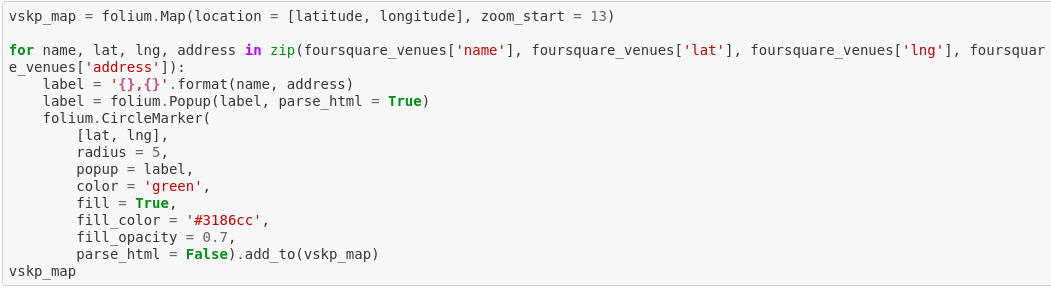

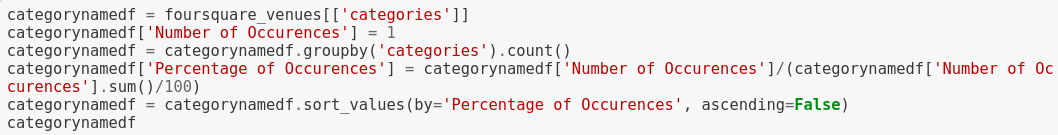

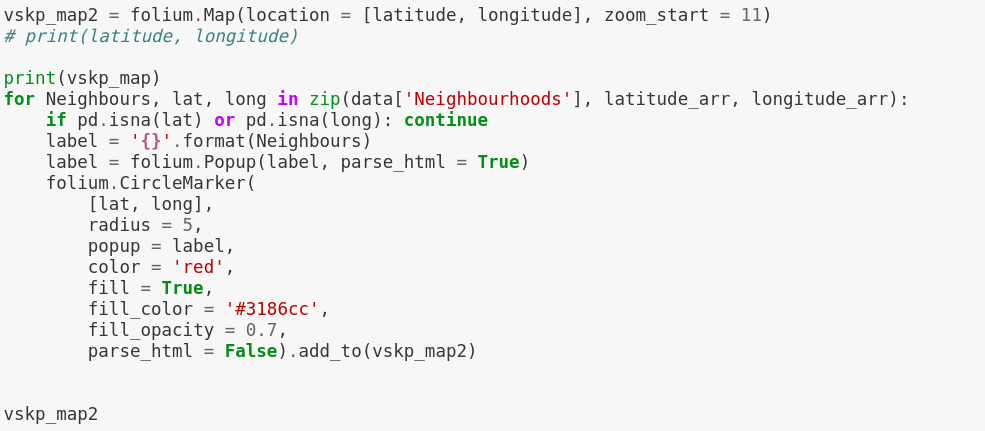

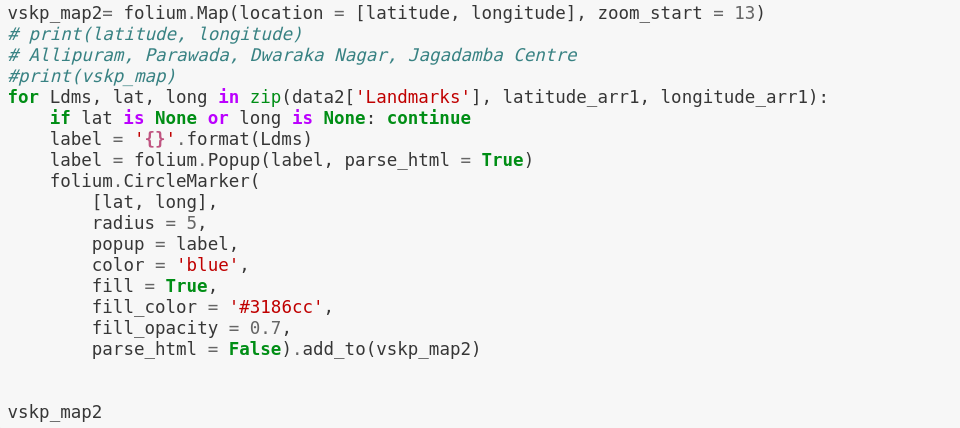

We can examine the Visakhapatnam map using Folium, a map rendering library on which we will overlay the venues and their addresses.
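A minimal sketch of how such a map could be built is shown here. The helper names `build_map` and `popup_text`, the zoom level, and the `(name, address, lat, lng)` tuple layout are assumptions for illustration; the notebook's own code is in the screenshots.

```python
def popup_text(name, address):
    """Label shown when a marker is clicked: the name plus the address if known."""
    return f"{name}, {address}" if address else name


def build_map(center, places, zoom_start=12):
    """Return a folium map centred on `center` with one marker per place.

    `places` is an iterable of (name, address, lat, lng) tuples.
    """
    import folium  # imported lazily; requires the folium package

    city_map = folium.Map(location=list(center), zoom_start=zoom_start)
    for name, address, lat, lng in places:
        folium.Marker(
            location=[lat, lng],
            popup=popup_text(name, address),
        ).add_to(city_map)
    return city_map
```

The returned map renders inline in a notebook, or it can be written to disk with `city_map.save("vizag.html")`.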

Foursquare API:

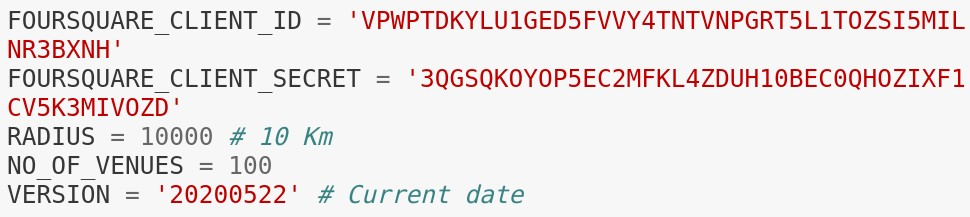

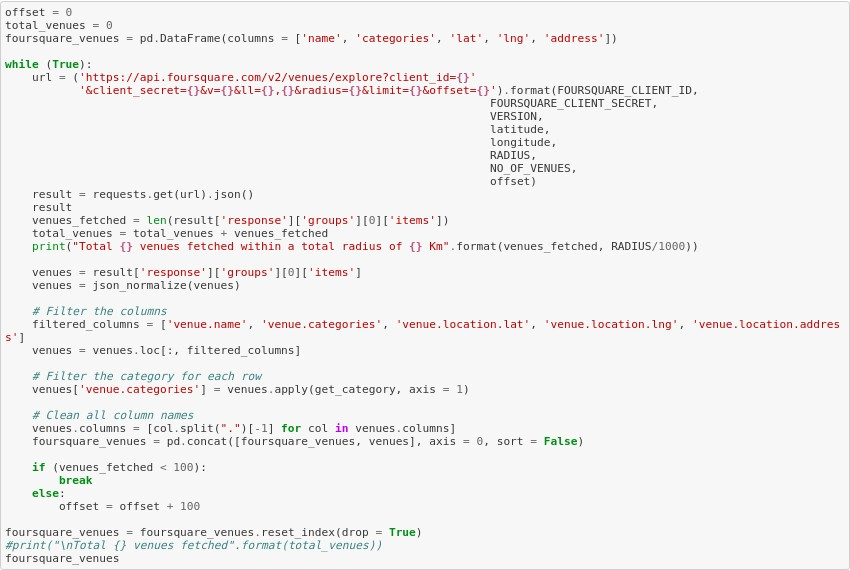

We will fetch all venues in Visakhapatnam up to 10 kilometers from the center of the city using the Foursquare API. The Foursquare API has an explore endpoint, which returns venue recommendations within a given radius of the given coordinates. We will use this endpoint to find all the venues.

To access Foursquare data through API calls we need an account. For this project we used a developer account, which is free of cost.

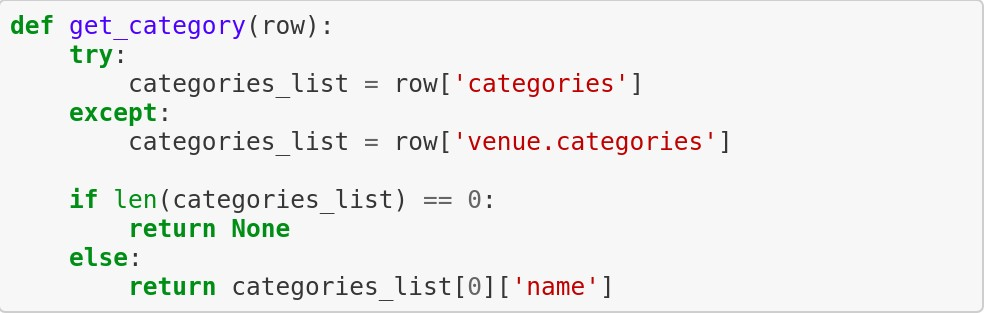

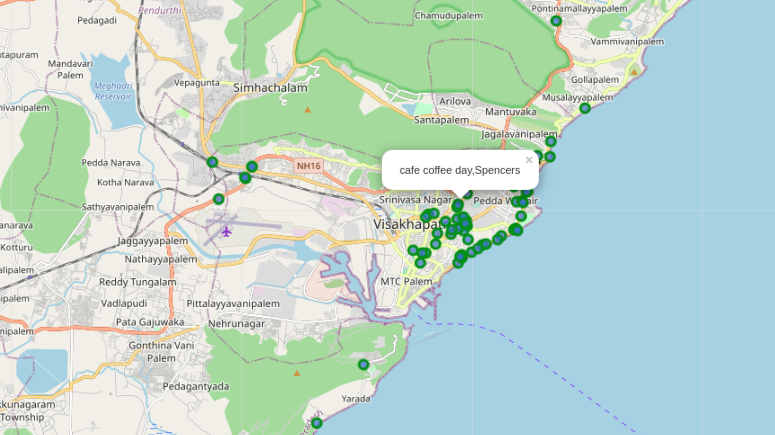

Function: get_category() extracts the category name of each venue, since the API returns the data as nested keys and values.
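As a rough idea of what such a helper might look like (the original is only visible in the screenshot), the sketch below assumes each venue is a dictionary with a `categories` list whose entries have a `name` key, which is how the Foursquare v2 venue objects were structured.

```python
def get_category(venue):
    """Return the name of the venue's first category, or None if it has none."""
    categories = venue.get("categories") or []
    if not categories:
        return None
    return categories[0]["name"]
```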

We’ll call the API repeatedly until we have all venues within the given distance. A single call returns at most 100 venues, so we fetch all venues by calling the API iteratively and increasing the offset each time.

The Foursquare API requires a client_id and a client_secret, which are obtained by creating a developer account. We will set the radius to 10 kilometers. The version is a required parameter: a date that tells the API which version of its behaviour the client expects.
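The offset-based paging can be sketched as follows. This assumes the legacy Foursquare v2 `venues/explore` endpoint and its response layout (`response.groups[0].items`), which may no longer be available for new accounts; the function names are hypothetical. The page fetcher is injectable so the loop can be exercised without credentials.

```python
import json
import urllib.parse
import urllib.request

EXPLORE_URL = "https://api.foursquare.com/v2/venues/explore"  # legacy v2 endpoint
PAGE_SIZE = 100  # maximum number of venues returned per call


def build_params(client_id, client_secret, version, lat, lng, radius_m, offset):
    """Query parameters for one page of results (radius is in metres)."""
    return {
        "client_id": client_id,
        "client_secret": client_secret,
        "v": version,  # date string in YYYYMMDD form
        "ll": f"{lat},{lng}",
        "radius": radius_m,
        "limit": PAGE_SIZE,
        "offset": offset,
    }


def fetch_page(params):
    """Perform one HTTP request and return the list of result items."""
    url = EXPLORE_URL + "?" + urllib.parse.urlencode(params)
    with urllib.request.urlopen(url, timeout=30) as response:
        payload = json.load(response)
    return payload["response"]["groups"][0]["items"]


def fetch_all_venues(credentials, lat, lng, radius_m=10_000, fetch=fetch_page):
    """Call the API page by page until a page comes back short.

    `credentials` is a (client_id, client_secret, version) tuple.
    """
    venues, offset = [], 0
    while True:
        items = fetch(build_params(*credentials, lat, lng, radius_m, offset))
        venues.extend(item["venue"] for item in items)
        if len(items) < PAGE_SIZE:  # last page reached
            break
        offset += PAGE_SIZE
    return venues
```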

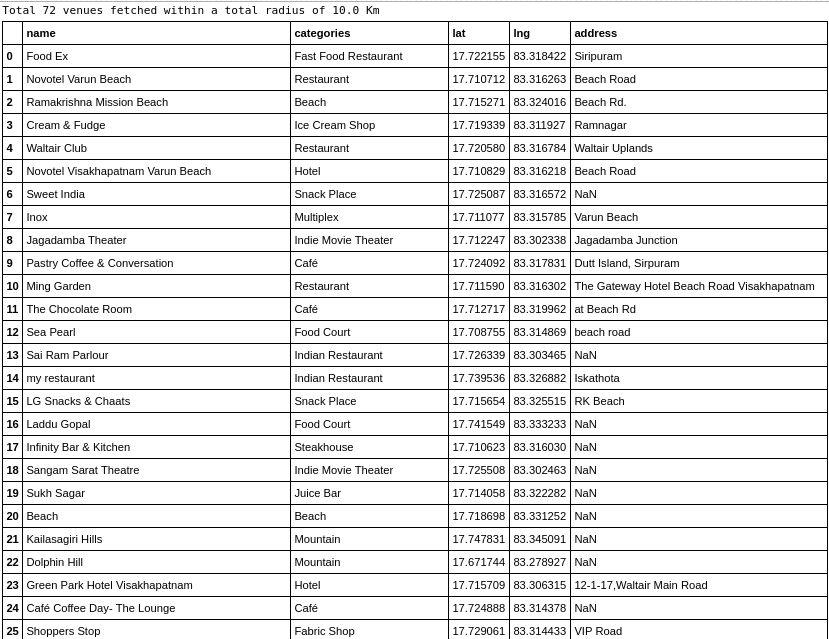

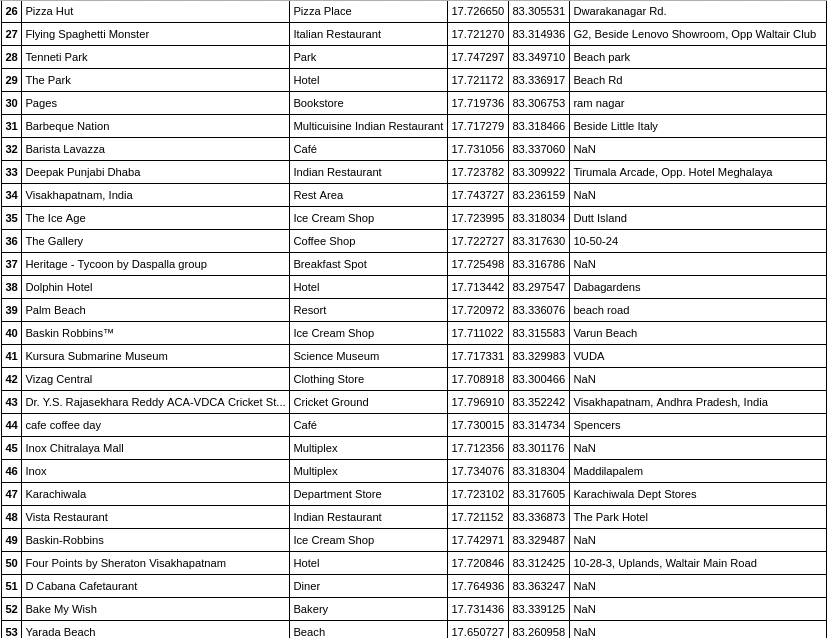

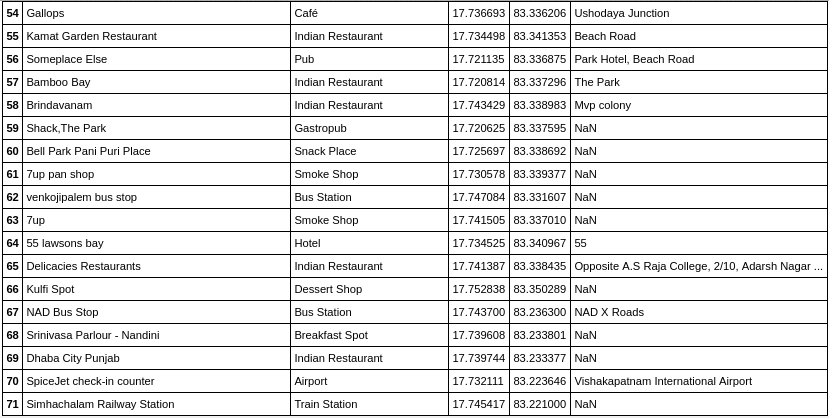

Venues fetched are:

Let’s visualize these Foursquare data items on the Visakhapatnam map.

Exploratory Data Analysis:

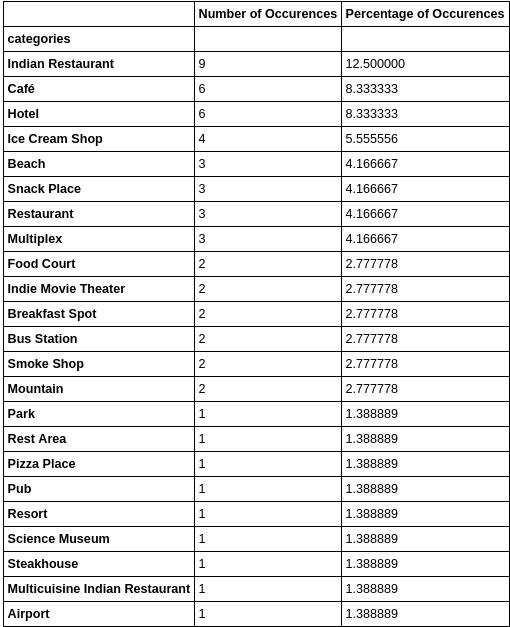

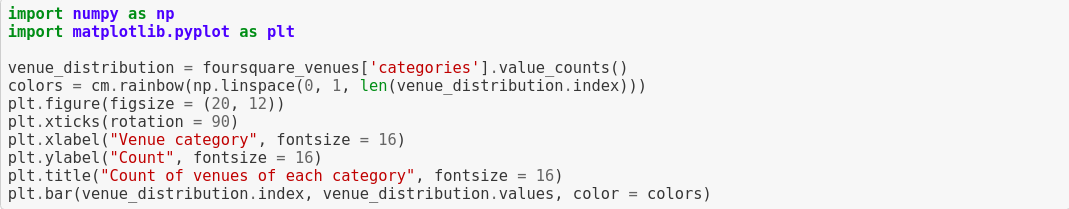

Using the Foursquare API we obtained information about the venues closest to the location coordinates provided. This information was then stored in a data frame using Pandas, a Python data analysis toolkit. The next stage of the analysis involves grouping these venues into categories and then determining the most and least prevalent types of venue in Visakhapatnam.
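The grouping step amounts to counting venues per category and ranking the counts. Here is a dependency-free sketch using `collections.Counter`; with a Pandas data frame the equivalent would be `df["category"].value_counts()`. The `category` key and the function name are assumptions for the example.

```python
from collections import Counter


def category_counts(venues):
    """Return (category, count) pairs, most prevalent category first."""
    counts = Counter(venue["category"] for venue in venues)
    return counts.most_common()
```

The first entry of the result is the most prevalent category and the last entry the least prevalent one, which is the ranking a bar plot of the categories displays.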

Let’s create a bar plot:

It’s evident that Indian restaurants are the most common type of venue in Visakhapatnam, so tourists may want to visit one to experience the gustatory delights of Indian cuisine. From the above table and graph they can decide which venues to visit and which to skip.

Web Scraping: Beautiful Soup

To put it simply, say you’ve found some webpages that display data relevant to you but do not provide any way of downloading the data directly. Beautiful Soup helps you pull particular content from a webpage, remove the HTML markup, and save the information.

From this Wikipedia page, https://en.wikipedia.org/wiki/Visakhapatnam, we are going to extract the Cityscape sections, namely Neighbourhoods and Landmarks.

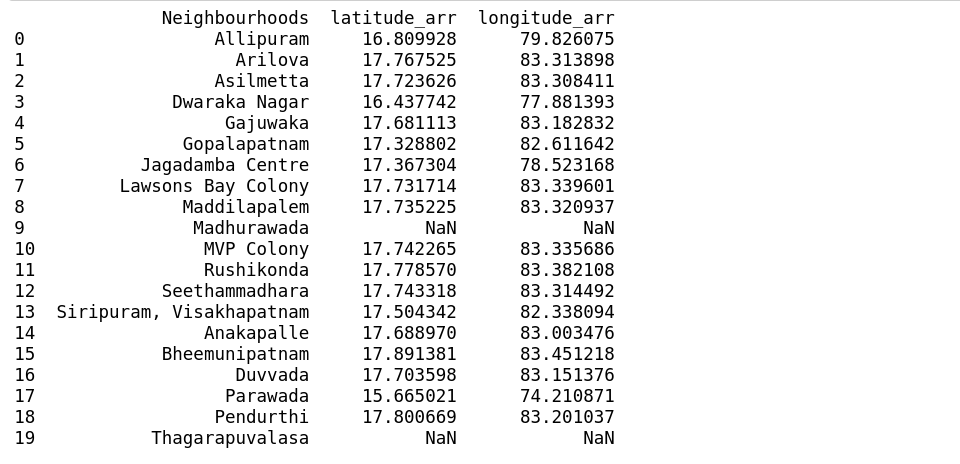

- Neighbourhoods: Allipuram, Arilova, Asilmetta, Dwaraka Nagar, Gajuwaka, Gopalapatnam, Jagadamba Centre, Lawsons Bay Colony, Maddilapalem, Madhurawada, MVP Colony, Rushikonda, Seethammadhara, Siripuram, Visakhapatnam, Anakapalle, Bheemunipatnam, Duvvada, Parawada, Pendurthi, Thagarapuvalasa.

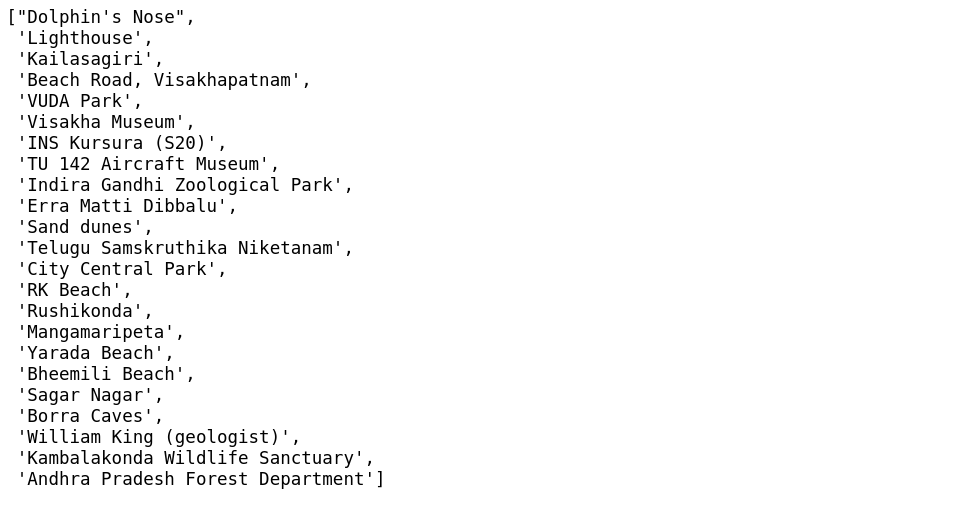

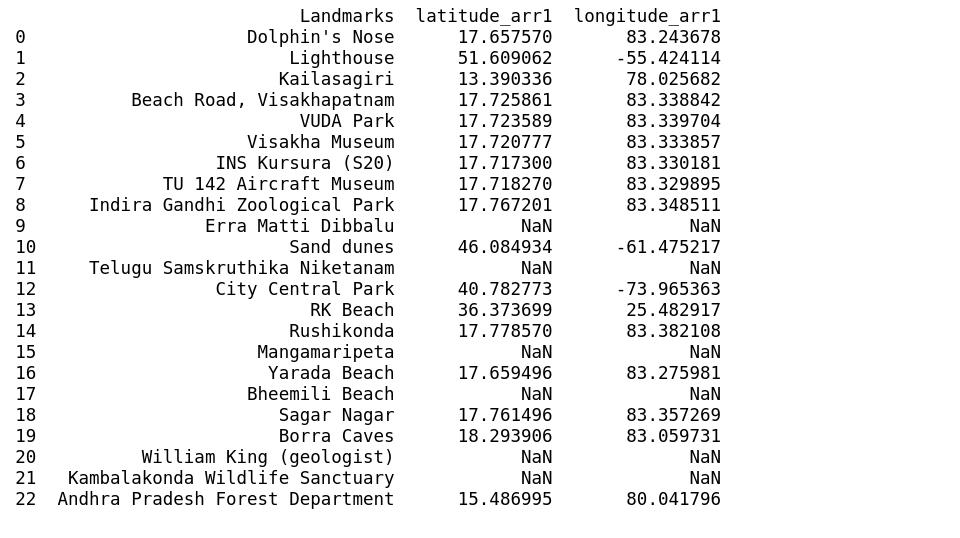

- Landmarks: Dolphin’s Nose, Lighthouse, Kailasagiri, Beach Road, Visakhapatnam, VUDA Park, Visakha Museum, INS Kursura (S20), TU 142 Aircraft Museum, Indira Gandhi Zoological Park, Erra Matti Dibbalu, Sand dunes, Telugu Samskruthika Niketanam, City Central Park, RK Beach, Rushikonda, Mangamaripeta, Yarada Beach, Bheemili Beach, Sagar Nagar, Borra Caves, William King (geologist), Kambalakonda Wildlife Sanctuary, Andhra Pradesh Forest Department.

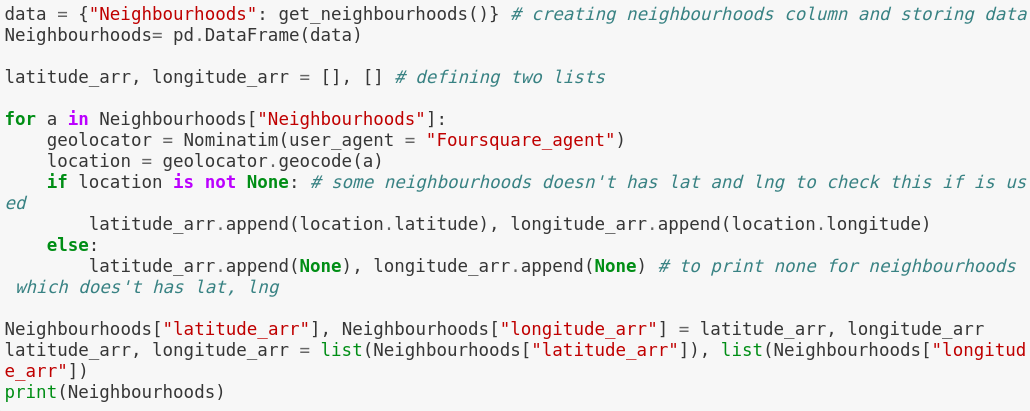

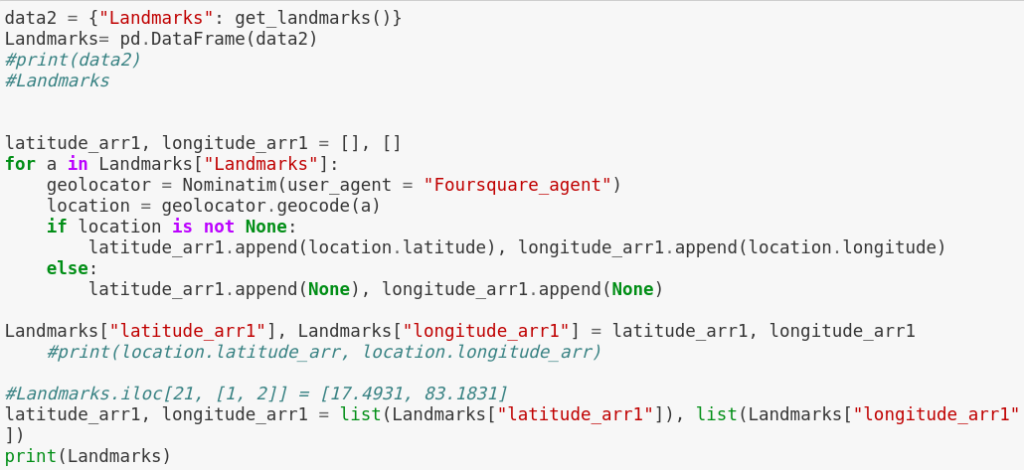

To display these attractions on the Visakhapatnam map we don’t have latitude and longitude readily available. So we’ll use geopy.geocoders and Nominatim to convert these addresses into latitude and longitude coordinates, and then pass the coordinates to folium to overlay these places on the Vizag map we created earlier in this project.

The get_neighbourhoods() function extracts all the anchor tags in the Neighbourhoods section of the Wikipedia page.

We have retrieved all the neighbourhoods of Vizag city.

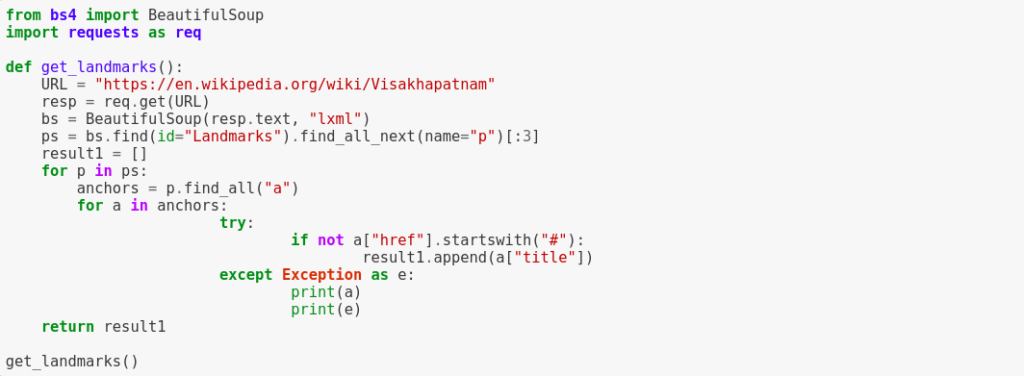

The get_landmarks() function extracts all the anchor tags in the Landmarks section:

There are three paragraphs in the Landmarks section, and each paragraph has citations. A citation tells readers that certain material came from another source, and gives them the information needed to find that source again, such as the author and the title of the work.

While extracting the titles, the parser fails on some of these anchors (presumably the citation links, which do not carry a title), which is why a try and except block is used to catch and handle the exceptions. Python executes the code following the try statement as a “normal” part of the program (here, taking the title of the anchor tag). The code that follows the except statement is the program’s response to any exception raised in the preceding try clause.
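The extraction with its try/except guard can be sketched as below. This is an approximation rather than the code in the screenshots: the function name, the assumption that a section can be located by the `id` of its heading, and the use of the built-in `html.parser` are ours, and Wikipedia's markup may differ or change. Accessing a missing attribute on a Beautiful Soup tag raises `KeyError`, which is the exception handled here.

```python
from bs4 import BeautifulSoup


def get_section_link_titles(html, section_id, paragraphs=1):
    """Collect the title of every titled link in the paragraphs after a heading.

    `section_id` is the id attribute of the section heading (assumed layout).
    """
    soup = BeautifulSoup(html, "html.parser")
    node = soup.find(id=section_id)
    if node is None:
        return []

    titles = []
    for _ in range(paragraphs):
        node = node.find_next("p")  # next paragraph in document order
        if node is None:
            break
        for anchor in node.find_all("a"):
            try:
                titles.append(anchor["title"])
            except KeyError:
                # Anchors without a title (e.g. citation markers) are skipped.
                continue
    return titles
```

Under these assumptions, the Landmarks section with its three paragraphs would be read with `get_section_link_titles(html, "Landmarks", paragraphs=3)`.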

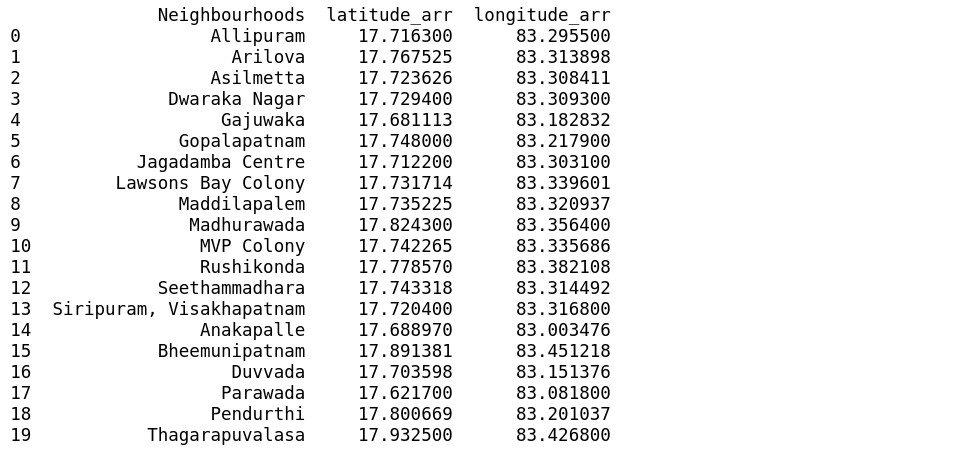

Let’s call the get_neighbourhoods() function, store the result in a Neighbourhoods data frame, and pass it to the geolocator to get the geographical coordinates.

Visakhapatnam latitudes start with ’17’ and longitudes start with ’83’.

As we can see, some rows don’t satisfy the above criterion and others have NaN values. This may be for a variety of reasons: the same place name may exist in some other area, or the geocoder may not have data for the place.
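The check described above can be automated with a small helper that flags the rows needing manual correction. This is a sketch under the stated rule only (latitude in the 17s, longitude in the 83s); the function names and the `(name, lat, lng)` row layout are assumptions.

```python
import math


def is_plausible_vizag(lat, lng):
    """True when the latitude starts with 17 and the longitude starts with 83."""
    if lat is None or lng is None:
        return False
    if math.isnan(lat) or math.isnan(lng):
        return False
    return int(lat) == 17 and int(lng) == 83


def rows_needing_correction(rows):
    """Return the positions of (name, lat, lng) rows that fail the check."""
    return [
        position
        for position, (_name, lat, lng) in enumerate(rows)
        if not is_plausible_vizag(lat, lng)
    ]
```

The returned positions are integer row positions, so they can be used directly with iloc.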

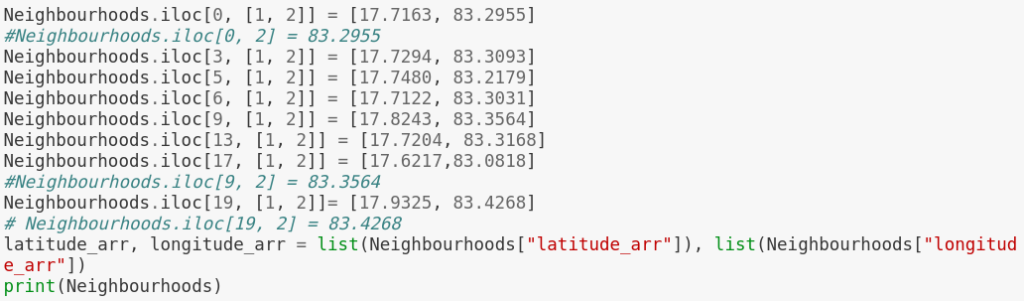

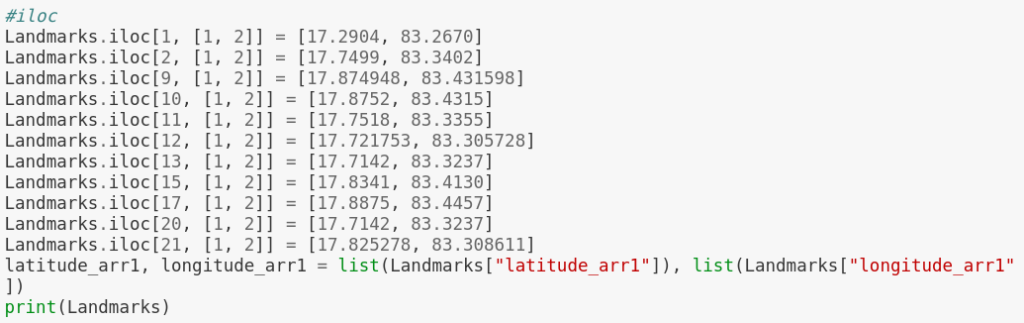

We use the iloc method to correct the false data.

iloc selects rows (or columns) by their integer position in the data frame, so it only takes integers.
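A short sketch of a position-based correction with iloc follows. The helper name `correct_row` and the column names `lat` and `lng` are assumptions, and the place names and coordinates in the usage lines are placeholders, not values from the project.

```python
import pandas as pd


def correct_row(df, position, lat, lng):
    """Overwrite the lat/lng of the row at integer `position`, in place."""
    lat_col = df.columns.get_loc("lat")  # iloc needs integer column positions
    lng_col = df.columns.get_loc("lng")
    df.iloc[position, lat_col] = lat
    df.iloc[position, lng_col] = lng
    return df


# Placeholder data: the second row came back from the geocoder as NaN.
example = pd.DataFrame({
    "name": ["Place A", "Place B"],
    "lat": [17.70, float("nan")],
    "lng": [83.30, float("nan")],
})
correct_row(example, 1, 17.80, 83.40)
```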

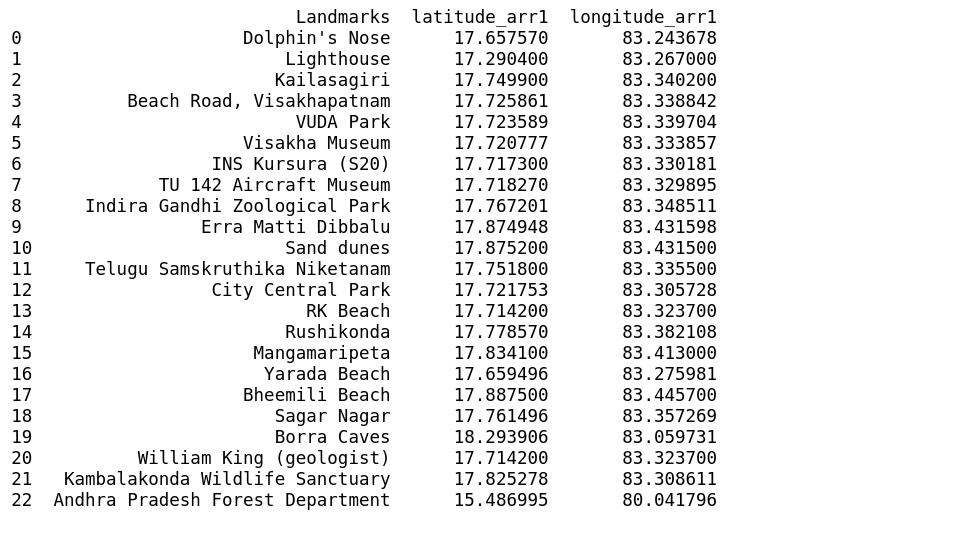

Neighbourhoods of Visakhapatnam along with lat and lng.

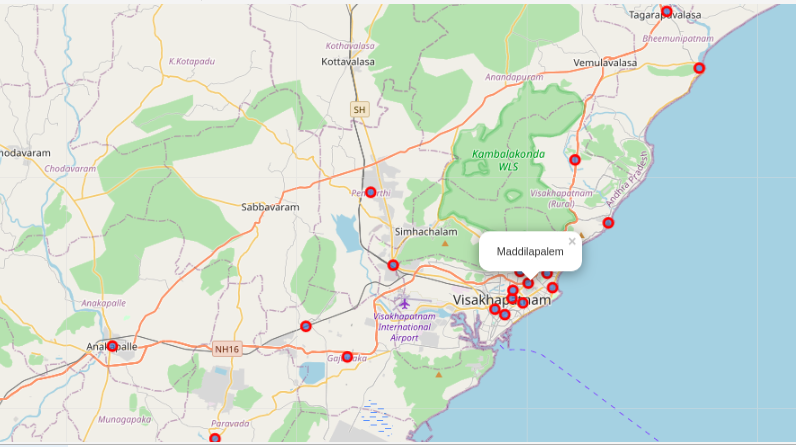

Map displaying Neighbourhoods

Let’s call the get_landmarks() function to extract all the landmarks.

We enter the latitude and longitude manually, using the iloc[] method, in the rows where the data is incorrect.

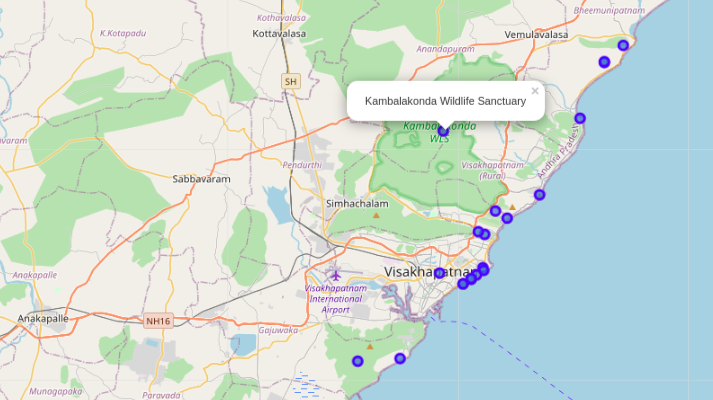

Landmarks of Visakhapatnam.

Results and Discussions:

Based on our analysis above, we can draw a number of conclusions that will be useful to anyone visiting the city of Visakhapatnam, India.

After collecting data from Foursquare, we got a list of 72 different venues. We identified that, of the total set of venues, the majority were Indian Restaurants. A visitor who loves Indian food would surely like to visit Visakhapatnam.

Finally, through clusters we identified that many venues are clustered around ‘Varun beach’, ‘RK beach’, ‘Siripuram’, ‘Sampath Vinayaka temple’, ‘MVP colony’, ‘Diamond park’, and ‘Beach road’.

On the other hand, we have visualized the Neighbourhoods and Landmarks. Interestingly, most of the Landmarks are scattered along the beach corridor.

If you’re looking for Indian restaurants, Visakhapatnam offers a wide array of varieties to enjoy.

Conclusion:

The purpose of this project was to explore the places that a person visiting Visakhapatnam could visit. The venues were identified using Foursquare and plotted on the map, along with the Neighbourhoods and Landmarks. The map reveals seven major areas a person can visit: Varun beach, RK beach, Siripuram, Sampath Vinayaka temple, MVP colony, Diamond park and Beach road. Visitors can choose among these places based on how easily they can reach them. A tourist who visits the beach corridor is likely to cover many of the places worth visiting, including both popular venues and landmarks.
